Xunzhe Zhou

I am a first-year PhD student at HKU IDS, supervised by Prof. Yanchao Yang and Prof. Yi Ma. I received my Bachelor's degree in Computer Science from Fudan University. Previously, I interned at Shanghai AI Lab, mentored by Dr. Biqing Qi and Dr. Yan Ding. Before that, I worked on open-world mobile manipulation with Prof. Lin Shao from NUS, and with Prof. Xiangyang Xue and Prof. Yanwei Fu from Fudan. I also maintain active connections with Prof. Yanghua Xiao and Prof. Siyang Leng. I was an exchange student at UC Berkeley in Fall 2023, with a GPA of 4.0/4.0.

I am actively looking for interns and collaborators; feel free to contact me if interested!

Email / CV (2025.10) / GitHub / Scholar / WeChat

News

Selected Papers

* denotes equal contribution. Representative papers are highlighted.

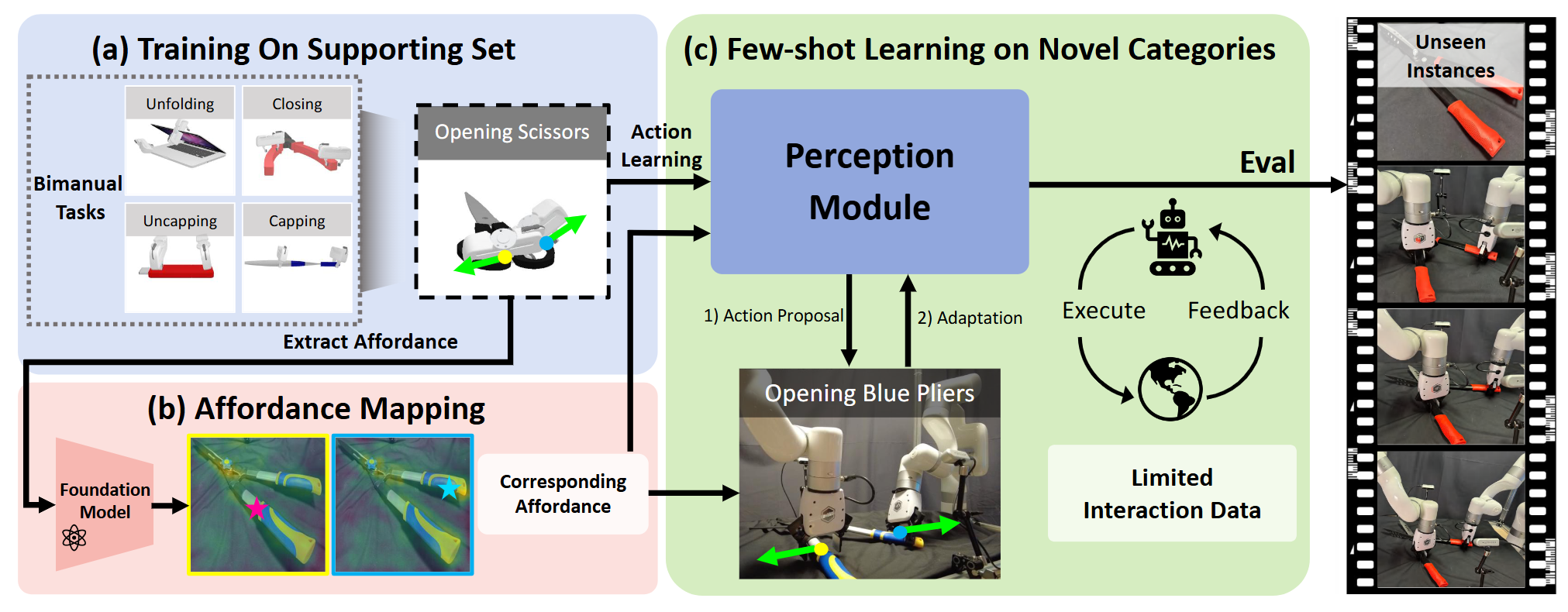

Bi-Adapt: Few-shot Bimanual Adaptation for Novel Categories of 3D Objects via Semantic Correspondence
Jinxian Zhou, Ruihai Wu, Yiwei Liu, Yiwen Hou, Xunzhe Zhou, Checheng Yu, Licheng Zhong, Lin Shao

In submission

We present Bi-Adapt, a novel framework for efficiently learning generalizable bimanual manipulation. It first learns point-level actions on the support set for different bimanual tasks. It then predicts actions on novel categories using foundation-model-guided affordance, which enables cross-category generalization after few-shot adaptation.

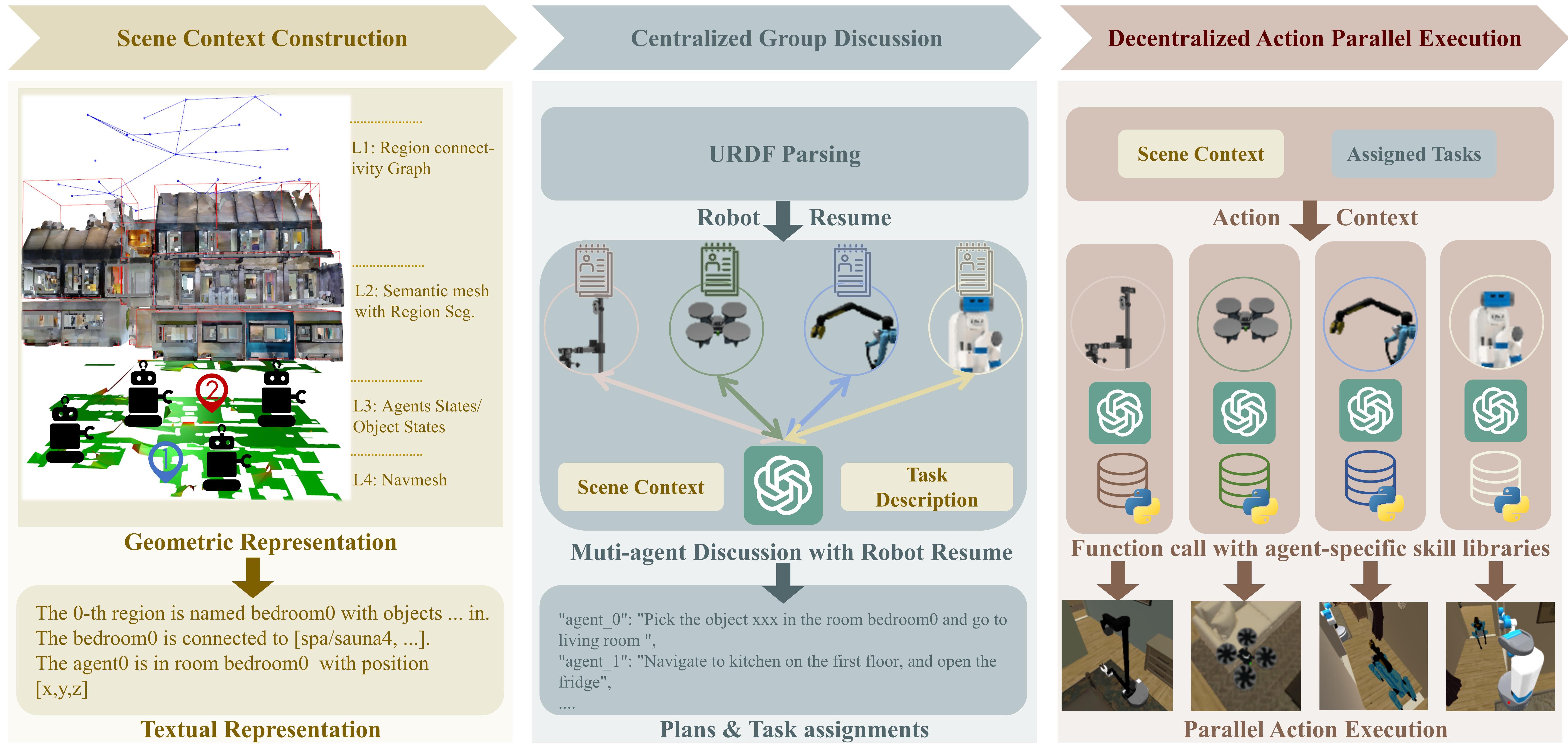

EMOS: Embodiment-aware Heterogeneous Multi-robot Operating System with LLM Agents
Junting Chen*, Checheng Yu*, Xunzhe Zhou*, Tianqi Xu, Yao Mu, Mengkang Hu, Wenqi Shao, Yikai Wang, Guohao Li, Lin Shao

International Conference on Learning Representations (ICLR), 2025

We introduce EMOS, a multi-agent framework that improves collaboration among heterogeneous robots with varying embodiment capabilities. To evaluate how well our multi-agent system (MAS) performs, we designed the Habitat-MAS benchmark, which includes four tasks: 1) navigation, 2) perception, 3) manipulation, and 4) comprehensive multi-floor object rearrangement.

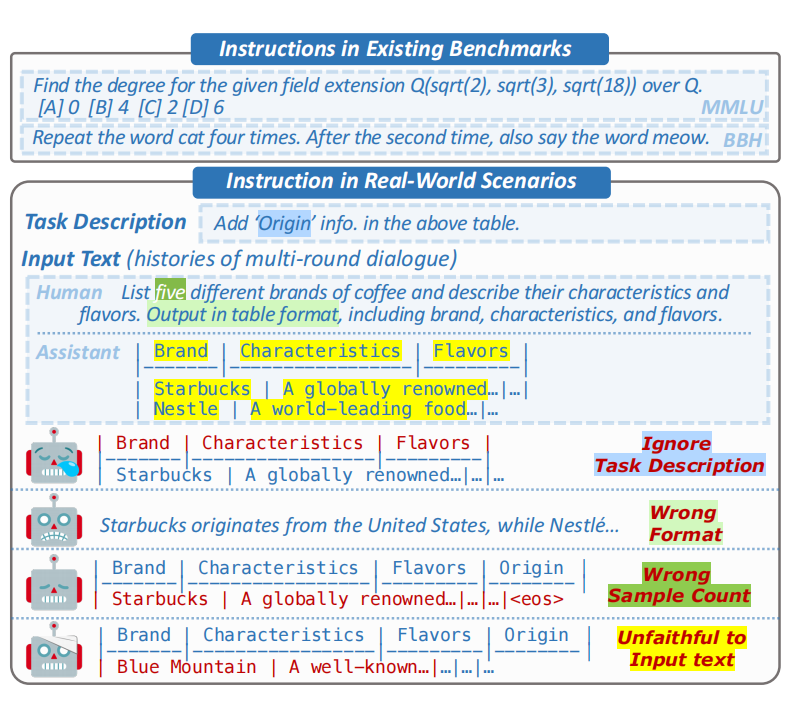

Can Large Language Models Understand Real-World Complex Instructions?
Qianyu He, Jie Zeng, Wenhao Huang, Lina Chen, Jin Xiao, Qianxi He, Xunzhe Zhou, Lida Chen, Xintao Wang, Yuncheng Huang, Haoning Ye, Zihan Li, Shisong Chen, Yikai Zhang, Zhouhong Gu, Jiaqing Liang, Yanghua Xiao

AAAI Conference on Artificial Intelligence (AAAI), 2024

We propose CELLO, a benchmark for evaluating LLMs' ability to follow complex instructions. We design a real-world dataset of 566 samples across 9 tasks, carefully crafted by human experts. We also establish 4 criteria with corresponding metrics and use them to compare 18 Chinese-oriented models and 15 English-oriented models.